Engineers from Rice University and the University of Maryland have created full-motion video technology that could potentially be used to make cameras that peer through fog, smoke, driving rain, murky water, skin, bone and other media that scatter light and obscure objects from view.

“Imaging through scattering media is the ‘holy grail problem’ in optical imaging at this point,” said Rice’s Ashok Veeraraghavan, co-corresponding author of an open-access study published today in Science Advances. “Scattering is what makes light — which has lower wavelength, and therefore gives much better spatial resolution — unusable in many, many scenarios. If you can undo the effects of scattering, then imaging just goes so much further.”

Veeraraghavan’s lab collaborated with the research group of Maryland co-corresponding author Christopher Metzler to create a technology they named NeuWS, which is an acronym for “neural wavefront shaping,” the technology’s core technique.

“If you ask people who are working on autonomous driving vehicles about the biggest challenges they face, they’ll say, ‘Bad weather. We can’t do good imaging in bad weather.’” Veeraraghavan said. “They are saying ‘bad weather,’ but what they mean, in technical terms, is light scattering. If you ask biologists about the biggest challenges in microscopy, they’ll say, ‘We can’t image deep tissue in vivo.’ They’re saying ‘deep tissue’ and ‘in vivo,’ but what they actually mean is that skin and other layers of tissue they want to see through, are scattering light. If you ask underwater photographers about their biggest challenge, they’ll say, ‘I can only image things that are close to me.’ What they actually mean is light scatters in water, and therefore doesn’t go deep enough for them to focus on things that are far away.

“In all of these circumstances, and others, the real technical problem is scattering,” Veeraraghavan said.

He said NeuWS could potentially be used to overcome scattering in those scenarios and others.

“This is a big step forward for us, in terms of solving this in a way that’s potentially practical,” he said. “There’s a lot of work to be done before we can actually build prototypes in each of those application domains, but the approach we have demonstrated could traverse them.”

Conceptually, NeuWS is based on the principle that light waves are complex mathematical quantities with two key properties that can be computed for any given location. The first, magnitude, is the amount of energy the wave carries at the location, and the second is phase, which is the wave’s state of oscillation at the location. Metzler and Veeraraghavan said measuring phase is critical for overcoming scattering, but it is impractical to measure directly because of the high frequency of optical light.
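To make the two properties concrete, here is a minimal Python sketch, not taken from the study, that represents a light wave at a few locations as complex numbers. The sample values and variable names are hypothetical. It also illustrates why phase is the hard part: an intensity-only reading is the same for two fields that differ only in phase.

```python
import numpy as np

# Hypothetical field values at four sensor locations (illustrative numbers only).
magnitude = np.array([1.0, 0.5, 2.0, 1.5])
phase_a = np.array([0.0, 0.3, -1.2, 2.0])   # radians
phase_b = np.array([1.0, -0.7, 0.4, -2.5])  # same magnitudes, different phases

# A complex number encodes both properties: magnitude * exp(i * phase).
field_a = magnitude * np.exp(1j * phase_a)
field_b = magnitude * np.exp(1j * phase_b)

# Both properties can be computed back from the complex value.
assert np.allclose(np.abs(field_a), magnitude)
assert np.allclose(np.angle(field_a), phase_a)

# An intensity-only reading (|field|^2) keeps magnitude but discards phase,
# so the two different fields are indistinguishable from intensity alone.
intensity_a = np.abs(field_a) ** 2
intensity_b = np.abs(field_b) ** 2
print(np.allclose(intensity_a, intensity_b))
```

The final comparison shows that the two fields produce matching intensities even though their phases differ, which is why phase has to be inferred indirectly.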

So they instead measure incoming light as “wavefronts” — single measurements that contain both phase and intensity information — and use backend processing to rapidly decipher phase information from several hundred wavefront measurements per second.

“The technical challenge is finding a way to rapidly measure phase information,” said Metzler, an assistant professor of computer science at Maryland and “triple Owl” Rice alum who earned his Ph.D., master’s and bachelor’s degrees in electrical and computer engineering from Rice in 2019, 2014 and 2013, respectively. Metzler was at Rice University during the development of an earlier iteration of wavefront-processing technology called WISH that Veeraraghavan and colleagues published in 2020.

“WISH tackled the same problem, but it worked under the assumption that everything was static and nice,” Veeraraghavan said. “In the real world, of course, things change all of the time.”

With NeuWS, he said, the idea is not only to undo the effects of scattering, but to undo them fast enough that the scattering medium itself doesn’t change during the measurement.

“Instead of measuring the state of oscillation itself, you measure its correlation with known wavefronts,” Veeraraghavan said. “You take a known wavefront, you interfere that with the unknown wavefront and you measure the interference pattern produced by the two. That is the correlation between those two wavefronts.”

Metzler used the analogy of looking at the North Star at night through a haze of clouds. “If I know what the North Star is supposed to look like, and I can tell it is blurred in a particular way, then that tells me how everything else will be blurred.”

Veeraraghavan said, “It’s not a comparison, it’s a correlation, and if you measure at least three such correlations, you can uniquely recover the unknown wavefront.”
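The idea that three interference measurements suffice can be illustrated with classical three-step phase-shifting interferometry. The Python sketch below is a textbook stand-in under stated assumptions, not the NeuWS algorithm: it assumes an ideal unit-amplitude reference wave whose phase can be set to 0, 2π/3 and 4π/3, noise-free intensity readings, and a hypothetical 8-by-8 unknown wavefront. The function names `measure` and `recover` are invented for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical unknown wavefront on an 8x8 grid (illustrative values only).
amplitude = rng.uniform(0.2, 1.0, size=(8, 8))
phase = rng.uniform(-np.pi, np.pi, size=(8, 8))
unknown = amplitude * np.exp(1j * phase)

# Three known reference phases, equally spaced around the circle.
shifts = np.array([0.0, 2 * np.pi / 3, 4 * np.pi / 3])

def measure(wavefront, shift):
    """Intensity of the unknown wavefront interfered with a known unit reference."""
    reference = np.exp(1j * shift)
    return np.abs(wavefront + reference) ** 2

def recover(intensities, shifts):
    """Combine intensity patterns taken at equally spaced reference phases.

    Each intensity is |u|^2 + 1 + 2*Re(u * conj(r_k)). Weighting by r_k and
    averaging cancels every term except the unknown field u itself.
    """
    weighted = sum(i_k * np.exp(1j * s) for i_k, s in zip(intensities, shifts))
    return weighted / len(shifts)

intensities = [measure(unknown, s) for s in shifts]
recovered = recover(intensities, shifts)
print(np.allclose(recovered, unknown))
```

Only real-valued intensity patterns enter `recover`, yet both the magnitude and the phase of the unknown wavefront come back. With fewer than three reference phases the cancellation in `recover` no longer holds, which is consistent with the "at least three" requirement in the quote.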

State-of-the-art spatial light modulators can make several hundred such measurements per second, and Veeraraghavan, Metzler and colleagues showed they could use a modulator and their computational method to capture video of moving objects that were obscured from view by intervening scattering media.

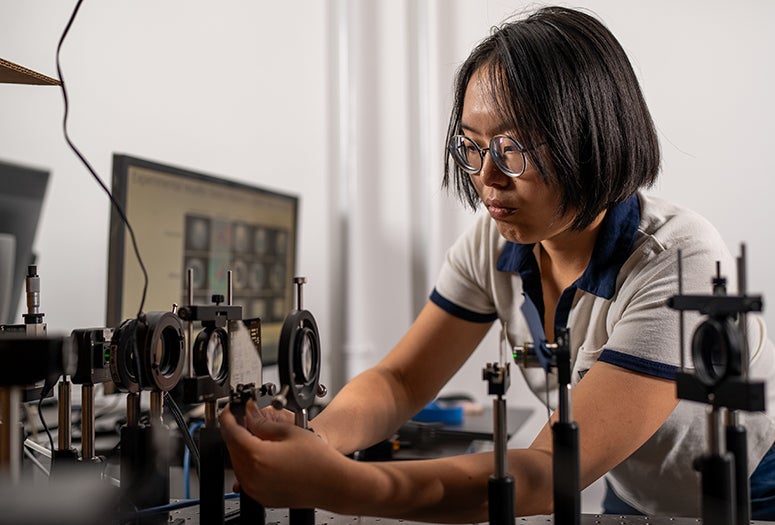

“This is the first step, the proof-of-principle that this technology can correct for light scattering in real time,” said Rice’s Haiyun Guo, one of the study’s lead authors and a Ph.D. student in Veeraraghavan’s research group.

In one set of experiments, for example, a microscope slide containing a printed image of an owl or a turtle was spun on a spindle and filmed by an overhead camera. Light-scattering media were placed between the camera and target slide, and the researchers measured NeuWS’ ability to correct for light scattering. Examples of scattering media included onion skin, slides coated with nail polish, slices of chicken breast tissue and light-diffusing films. For each of these, the experiments showed NeuWS could correct for light scattering and produce clear video of the spinning figures.

“We developed algorithms that allow us to continuously estimate both the scattering and the scene,” Metzler said. “That’s what allows us to do this, and we do it with mathematical machinery called neural representation that allows it to be both efficient and fast.”

NeuWS rapidly modulates light from incoming wavefronts to create several slightly altered phase measurements. The altered phases are then fed directly into a 16,000-parameter neural network that quickly computes the necessary correlations to recover the wavefront’s original phase information.

“The neural networks allow it to be faster by allowing us to design algorithms that require fewer measurements,” Veeraraghavan said.

Metzler said, “That’s actually the biggest selling point. Fewer measurements, basically, means we need much less capture time. It’s what allows us to capture video rather than still frames.”

The research was supported by the Air Force Office of Scientific Research (FA9550-22-1-0208), the National Science Foundation (1652633, 1730574, 1648451) and the National Institutes of Health (DE032051), and partial funding for open access was provided by the University of Maryland Libraries’ Open Access Publishing Fund.

- Peer-reviewed paper

“NeuWS: Neural Wavefront Shaping for Guidestar-Free Imaging Through Static and Dynamic Scattering Media” | Science Advances | DOI: 10.1126/sciadv.adg4671

Authors: Brandon Y. Feng, Haiyun Guo, Mingyang Xie, Vivek Boominathan, Manoj K. Sharma, Ashok Veeraraghavan and Christopher A. Metzler

- Video

(Video by Brandon Martin/Courtesy of Rice University)

- Image downloads

https://news-network.rice.edu/news/files/2023/06/0628_NEUWS-3pCc-lg.jpg

CAPTION: In experiments, camera technology called NeuWS, which was invented by collaborators at Rice University and the University of Maryland, was able to correct for the interference of light-scattering media between the camera and the object being imaged. The top row shows a reference image of a butterfly stamp (left), the stamp imaged by a regular camera through a piece of onion skin that was approximately 80 microns thick (center) and a NeuWS image that corrected for light scattering by the onion skin (right). The center row shows reference (left), uncorrected (center) and corrected (right) images of a sample of dog esophagus tissue with a 0.5 degree light diffuser as the scattering medium, and the bottom row shows corresponding images of a positive resolution target with a glass slide covered in nail polish as the scattering medium. Close-ups of inset images from each row are shown for comparison at left. (Figure courtesy Veeraraghavan Lab/Rice University)

https://news-network.rice.edu/news/files/2023/06/0628_NEUWS-hg33-lg.jpg

CAPTION: Rice University Ph.D. student Haiyun Guo, a member of the Rice Computational Imaging Laboratory, demonstrates a full-motion video camera technology that corrects for light scattering, which has the potential to allow cameras to film through fog, smoke, driving rain, murky water, skin, bone and other obscuring media. Guo, Rice Prof. Ashok Veeraraghavan and their collaborators at the University of Maryland described the technology in an open-access study published this week in Science Advances. (Photo by Brandon Martin/Rice University)

https://news-network.rice.edu/news/files/2023/06/0628_NEUWS-hgav92-lg.jpg

CAPTION: Rice University Ph.D. student Haiyun Guo and Prof. Ashok Veeraraghavan in the Rice Computational Imaging Laboratory. Guo, Veeraraghavan and collaborators at the University of Maryland have created full-motion video camera technology that corrects for light scattering and has the potential to allow cameras to film through fog, smoke, driving rain, murky water, skin, bone and other light-penetrable obstructions. (Photo by Brandon Martin/Rice University)

https://news-network.rice.edu/news/files/2023/06/0628_NEUWS-cm-lg.jpg

CAPTION: Christopher Metzler is an assistant professor of computer science at the University of Maryland and a Rice University “triple Owl” alumnus who earned doctorate, master’s and bachelor’s degrees in 2019, 2014 and 2013, respectively. (Photo courtesy of C. Metzler/University of Maryland)

- Related stories

US Army backs ‘sleeping cap’ to help brains take out the trash – Sept. 29, 2021

https://news.rice.edu/news/2021/us-army-backs-sleeping-cap-help-brains-take-out-trash

AI-powered microscope could check cancer margins in minutes – Dec. 17, 2020

https://news.rice.edu/news/2020/ai-powered-microscope-could-check-cancer-margins-minutes

Cameras see around corners in real time with deep learning – Jan. 17, 2020

https://news.rice.edu/news/2020/cameras-see-around-corners-real-time-deep-learning

Feds fund creation of headset for high-speed brain link – May 20, 2019

https://news.rice.edu/news/2019/feds-fund-creation-headset-high-speed-brain-link

PulseCam peeks below skin to map blood flow – April 13, 2020

https://news.rice.edu/news/2020/pulsecam-peeks-below-skin-map-blood-flow

Rice team designs lens-free fluorescent microscope – March 5, 2018

https://news2.rice.edu/2018/03/05/rice-team-designs-lens-free-fluorescent-microscope/

Wearable hospital lab: NSF awards $10M for bioimaging – Feb. 28, 2018

https://news2.rice.edu/2018/02/27/wearable-hospital-lab-nsf-awards-10m-for-bioimaging/

- About Rice

Located on a 300-acre forested campus in Houston, Rice University is consistently ranked among the nation’s top 20 universities by U.S. News & World Report. Rice has highly respected schools of Architecture, Business, Continuing Studies, Engineering, Humanities, Music, Natural Sciences and Social Sciences and is home to the Baker Institute for Public Policy. With 4,240 undergraduates and 3,972 graduate students, Rice’s undergraduate student-to-faculty ratio is just under 6-to-1. Its residential college system builds close-knit communities and lifelong friendships, just one reason why Rice is ranked No. 1 for lots of race/class interaction and No. 4 for quality of life by the Princeton Review. Rice is also rated as a best value among private universities by Kiplinger’s Personal Finance.